From Microservices to Monoliths: Why AI-Enabled CMS Architecture Matters More Than Ever

Cosmic AI

December 13, 2025

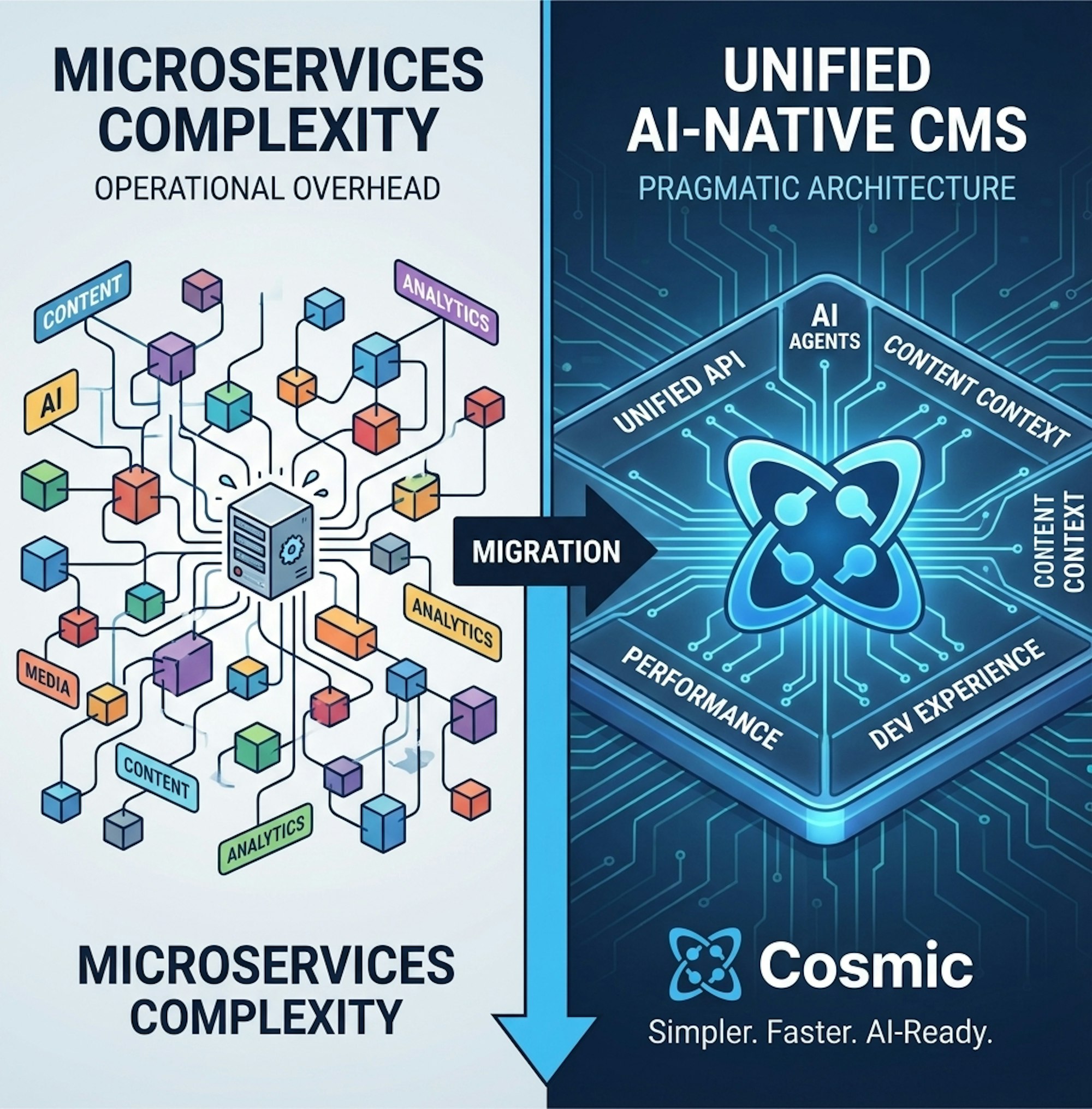

The software architecture landscape is experiencing a fascinating shift. This week, Twilio Segment's account of its migration from microservices back to a monolith resurfaced and made waves, sparking intense debate across the developer community. For teams building AI-enabled content management systems, this architectural conversation couldn't be more timely.

The Great Architecture Reversal

In their engineering blog post (July 2018), Twilio Segment detailed why they moved from microservices back to a monolith, citing operational complexity, deployment overhead, and the cognitive load of managing distributed systems. The discussion reveals a broader industry reckoning with architectural choices made during the microservices boom.

The timing is significant. As AI capabilities become central to content platforms, the question isn't just about microservices versus monoliths—it's about building architectures that can support rapidly evolving AI integrations without collapsing under their own complexity.

Why Architecture Matters for AI-Enabled CMS

Content management systems are uniquely positioned at the intersection of several challenging architectural problems:

1. Real-Time AI Processing

Modern CMS platforms need to handle AI operations that span:

- Content generation with large language models

- Image processing and generation

- Real-time content analysis and SEO optimization

- Autonomous agent operations

These operations depend on tight integration between content storage, AI processing, and API delivery. Every service boundary a request crosses adds network and serialization latency, while a monolith can keep these paths in-process and optimize them directly.
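To make the latency argument concrete, here is a back-of-the-envelope model. It assumes each step has a fixed compute time and that every service boundary adds a fixed overhead. The step names and millisecond figures are illustrative assumptions, not measurements of any platform.

```typescript
// Illustrative model: a request pipeline is a sequence of steps.
// Each step has some compute time; crossing a service boundary adds
// network + serialization overhead. All numbers are assumptions.
interface Step {
  name: string;
  computeMs: number;
}

// Total latency when every step runs in-process (no boundaries crossed).
function inProcessLatency(steps: Step[]): number {
  return steps.reduce((sum, s) => sum + s.computeMs, 0);
}

// Total latency when each step lives in its own service. A sequential
// pipeline of n steps crosses n - 1 boundaries (the hop from the client
// to the first service is not counted).
function distributedLatency(steps: Step[], hopOverheadMs: number): number {
  const hops = Math.max(steps.length - 1, 0);
  return inProcessLatency(steps) + hops * hopOverheadMs;
}

// Hypothetical pipeline: load content, analyze it, generate a draft, store it.
const pipeline: Step[] = [
  { name: "load-content", computeMs: 5 },
  { name: "seo-analysis", computeMs: 40 },
  { name: "llm-generation", computeMs: 1200 },
  { name: "store-result", computeMs: 5 },
];

console.log(inProcessLatency(pipeline)); // 1250
console.log(distributedLatency(pipeline, 15)); // 1295
```

In this model the overhead grows linearly with the number of boundaries. It matters most for chatty, multi-step workflows and is a small fraction of the total when a single long model call dominates.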

2. Context Management

AI assistants and agents require extensive context to function effectively. As one commenter noted in the Hacker News discussion about AI coding agents, context management remains the bottleneck for distributed systems. A CMS that splits content, media, and metadata across multiple services faces significant challenges in providing AI systems with the complete context they need.
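In practice, "providing complete context" across split services looks roughly like the sketch below. The caller has to fan out to each service, wait for every response, and merge the results before a model sees anything. The three fetcher functions and record shapes are hypothetical stand-ins for real service clients.

```typescript
// Hypothetical record shapes held by three separate services.
interface ContentRecord { id: string; title: string; body: string }
interface MediaRecord { contentId: string; altTexts: string[] }
interface MetadataRecord { contentId: string; tags: string[] }

// Hypothetical service clients; in a real system each is a network call.
type Fetcher<T> = (id: string) => Promise<T>;

interface AiContext {
  title: string;
  body: string;
  imageDescriptions: string[];
  tags: string[];
}

// Fan out to all three services in parallel, then merge into one context.
// Promise.all rejects if any single service call fails.
async function assembleContext(
  id: string,
  fetchContent: Fetcher<ContentRecord>,
  fetchMedia: Fetcher<MediaRecord>,
  fetchMetadata: Fetcher<MetadataRecord>,
): Promise<AiContext> {
  const [content, media, meta] = await Promise.all([
    fetchContent(id),
    fetchMedia(id),
    fetchMetadata(id),
  ]);
  return {
    title: content.title,
    body: content.body,
    imageDescriptions: media.altTexts,
    tags: meta.tags,
  };
}
```

Because `Promise.all` rejects as soon as any call fails, one unavailable service blocks the AI step entirely. When all three kinds of data live in one system, the same context can typically come back from a single query.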

3. Developer Experience

The complexity debate extends beyond operations to developer experience. When building AI-powered features, developers need:

- Fast iteration cycles

- Simplified debugging

- Clear data flow visibility

- Minimal deployment friction

Microservices architectures can add significant overhead to each of these concerns.

The Cosmic Approach: Pragmatic Architecture for AI

At Cosmic, we've designed our platform with these challenges in mind. Our architecture philosophy prioritizes:

Unified API Surface

Rather than forcing developers to orchestrate multiple services, Cosmic provides a single, cohesive API that handles:

- Content CRUD operations

- Media management and processing

- AI generation and analysis

- Webhook and automation workflows
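One way to picture a single, cohesive API is one client interface whose methods cover all four areas. Application code then holds one dependency instead of four. The `UnifiedCmsClient` interface and the in-memory implementation below are hypothetical illustrations, not the Cosmic SDK.

```typescript
// Hypothetical shape of a unified client; method names are illustrative only.
interface CmsObject { id: string; type: string; title: string; metadata: Record<string, unknown> }
interface MediaItem { id: string; name: string; bytes: number }
type CmsEvent = { kind: "object.created"; object: CmsObject };

interface UnifiedCmsClient {
  createObject(input: Omit<CmsObject, "id">): CmsObject; // content CRUD
  getObject(id: string): CmsObject | undefined;
  uploadMedia(name: string, data: Uint8Array): MediaItem; // media management
  generateText(prompt: string): Promise<string>; // AI generation
  on(kind: CmsEvent["kind"], handler: (e: CmsEvent) => void): void; // automation hooks
}

// In-memory stand-in so the interface can be exercised without a network.
class InMemoryCms implements UnifiedCmsClient {
  private objects = new Map<string, CmsObject>();
  private handlers: ((e: CmsEvent) => void)[] = [];
  private nextId = 1;

  createObject(input: Omit<CmsObject, "id">): CmsObject {
    const object = { ...input, id: `obj-${this.nextId++}` };
    this.objects.set(object.id, object);
    // Notify automation handlers, as a webhook would.
    this.handlers.forEach((h) => h({ kind: "object.created", object }));
    return object;
  }

  getObject(id: string) {
    return this.objects.get(id);
  }

  uploadMedia(name: string, data: Uint8Array): MediaItem {
    return { id: `media-${this.nextId++}`, name, bytes: data.byteLength };
  }

  async generateText(prompt: string): Promise<string> {
    // Placeholder for a real model call.
    return `Draft for: ${prompt}`;
  }

  // Only one event kind exists in this sketch, so the kind is not checked.
  on(_kind: CmsEvent["kind"], handler: (e: CmsEvent) => void): void {
    this.handlers.push(handler);
  }
}
```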

AI-First Design

Our AI Agents operate within the same unified system, eliminating the complexity of cross-service communication. Agents can:

- Access complete content context instantly

- Execute complex operations atomically

- Leverage built-in AI models without external service coordination
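Executing an agent's multi-step operation atomically means that either every change lands or none does. The minimal in-memory sketch below assumes a simple key-value content store; `ContentStore` and `applyAtomically` are hypothetical names. It stages the changes on a copy and commits only if every step succeeds.

```typescript
type Content = Record<string, string>;

// Hypothetical in-memory content store with all-or-nothing batch updates.
class ContentStore {
  private data = new Map<string, Content>();

  get(id: string): Content | undefined {
    const c = this.data.get(id);
    return c ? { ...c } : undefined;
  }

  set(id: string, content: Content): void {
    this.data.set(id, { ...content });
  }

  // Apply a sequence of agent steps atomically. Each step edits the staged
  // copy and may throw; on any error the live data is left untouched.
  applyAtomically(steps: ((staged: Map<string, Content>) => void)[]): boolean {
    // Copy the map and every record so steps cannot mutate live data.
    const staged = new Map(
      Array.from(this.data, ([id, c]) => [id, { ...c }] as [string, Content]),
    );
    try {
      for (const step of steps) step(staged);
    } catch {
      return false; // discard staged changes
    }
    this.data = staged; // commit all changes at once
    return true;
  }
}
```

A production system would get the same guarantee from database transactions. The point is that the guarantee is simple when content and metadata share one store. When they span services, it typically requires a pattern such as a saga or two-phase commit.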

Performance Without Compromise

By consolidating related functionality, we achieve:

- Sub-100ms API responses for content queries

- Parallel AI processing without service mesh overhead

- Atomic transactions across content and metadata

- Simplified caching strategies
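Cache invalidation shows most clearly what "simplified caching strategies" can mean. When reads and writes pass through one system, the write path can evict stale entries directly, without cross-service invalidation messages. Below is a minimal TTL cache sketch. The `QueryCache` name, the key-prefix convention, and the injectable clock are illustrative assumptions.

```typescript
// Minimal TTL cache for content query results, invalidated on write.
class QueryCache<T> {
  private entries = new Map<string, { value: T; expiresAt: number }>();

  // `now` is injectable so expiry can be tested with a fake clock.
  constructor(private ttlMs: number, private now: () => number = Date.now) {}

  get(key: string): T | undefined {
    const entry = this.entries.get(key);
    if (!entry) return undefined;
    if (this.now() >= entry.expiresAt) {
      this.entries.delete(key); // expired
      return undefined;
    }
    return entry.value;
  }

  set(key: string, value: T): void {
    this.entries.set(key, { value, expiresAt: this.now() + this.ttlMs });
  }

  // Called from the write path: drop every cached query for an object type,
  // assuming keys are prefixed by type (e.g. "posts:list").
  invalidatePrefix(prefix: string): void {
    for (const key of Array.from(this.entries.keys())) {
      if (key.startsWith(prefix)) this.entries.delete(key);
    }
  }
}
```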

Lessons from the Trenches

The Hacker News community's response to Segment's architectural shift reveals several key insights:

Complexity Has a Cost

Multiple commenters highlighted that microservices work well at massive scale, but most teams never reach that scale. The operational overhead—service mesh configuration, distributed tracing, cross-service debugging—consumes engineering time that could be spent building features.

For AI-enabled CMS platforms, this is especially true. The AI landscape evolves weekly. Teams need architectural flexibility to adopt new models, integrate new capabilities, and experiment rapidly.

Context is King

AI systems are only as good as their context. When content, metadata, and media live in separate services, reconstructing complete context for AI operations becomes expensive. As Simon Willison discusses in his analysis of useful patterns for building HTML tools, the ability to access and manipulate complete data structures is fundamental to effective AI integration.

Developer Experience Drives Adoption

The best architecture is the one developers actually want to use. A recent Ask HN thread about AI programming tools revealed that developers value simplicity and directness over theoretical purity. The same principle applies to CMS architecture.

Practical Implications for Content Teams

What does this mean for teams choosing a CMS in 2025?

Evaluate AI Integration Complexity

Ask potential CMS vendors:

- How many API calls are required to generate AI-powered content?

- What's the latency for AI operations?

- How do AI agents access content context?

- Can AI operations be composed with content operations?
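You can answer the first two questions empirically during a trial. The small wrapper below is a hypothetical helper, not any vendor's tooling. It counts how many calls a workflow makes and records the latency of each call.

```typescript
interface CallStats { calls: number; latenciesMs: number[] }

// Wrap an async function so every invocation is counted and timed.
function instrument<A extends unknown[], R>(
  fn: (...args: A) => Promise<R>,
  stats: CallStats,
): (...args: A) => Promise<R> {
  return async (...args: A) => {
    const start = Date.now();
    try {
      return await fn(...args);
    } finally {
      // Record the call whether it succeeded or threw.
      stats.calls += 1;
      stats.latenciesMs.push(Date.now() - start);
    }
  };
}

// Median latency across recorded calls (undefined if nothing was recorded).
function median(values: number[]): number | undefined {
  if (values.length === 0) return undefined;
  const sorted = [...values].sort((a, b) => a - b);
  const mid = Math.floor(sorted.length / 2);
  return sorted.length % 2 ? sorted[mid] : (sorted[mid - 1] + sorted[mid]) / 2;
}
```

Wrapping each client function a candidate CMS exposes, then running one realistic "generate and publish" flow, gives you both the call count and a latency distribution to compare across vendors.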

Consider Operational Overhead

Microservices-based CMS platforms may require:

- Multiple service deployments

- Complex monitoring and debugging

- Service mesh configuration

- Cross-service authentication

Unified platforms reduce this to a single API integration.

Plan for Evolution

The AI landscape changes rapidly. Your CMS architecture should support:

- Easy adoption of new AI models

- Rapid experimentation with AI features

- Simple integration of AI workflows

- Fast iteration on AI-powered experiences
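A common way to keep model adoption cheap is to put each provider behind a small interface and select it by configuration. Trying a new model then means registering one adapter rather than rewriting call sites. The `TextModel` interface, the registry, and the model names below are hypothetical.

```typescript
// Hypothetical provider-agnostic interface for text generation.
interface TextModel {
  name: string;
  generate(prompt: string): Promise<string>;
}

class ModelRegistry {
  private models = new Map<string, TextModel>();

  register(model: TextModel): void {
    this.models.set(model.name, model);
  }

  // Resolve a model by name (e.g. from config or a feature flag).
  get(name: string): TextModel {
    const model = this.models.get(name);
    if (!model) throw new Error(`Unknown model: ${name}`);
    return model;
  }
}

// Stub adapters standing in for real provider SDK calls.
const registry = new ModelRegistry();
registry.register({ name: "model-a", generate: async (p) => `[a] ${p}` });
registry.register({ name: "model-b", generate: async (p) => `[b] ${p}` });

// Call sites depend only on the interface; switching models is a config change.
async function summarize(modelName: string, text: string): Promise<string> {
  return registry.get(modelName).generate(`Summarize: ${text}`);
}
```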

The Future: Pragmatic, AI-Native Architecture

The microservices versus monolith debate misses a crucial point: the best architecture depends on your specific use case. For AI-enabled content platforms, the evidence suggests that simpler, more unified architectures provide significant advantages:

- Faster AI Operations: Reduced latency from fewer service boundaries

- Better Context Management: Complete data access without reconstruction

- Simpler Development: Single API surface for content and AI

- Lower Operational Cost: Fewer services to monitor and maintain

- Rapid Innovation: Quick adoption of new AI capabilities

Real-World Performance

Cosmic's unified architecture enables workflows that would be complex or slow in microservices, such as running three AI generations and then creating a content object from the results.

That entire operation, three AI generations plus content creation, completes in seconds, not minutes.
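A workflow like the one described above might look roughly like the following sketch. It runs three independent AI generations in parallel and then writes their results as one content object. The `generate` and `createObject` functions are hypothetical stubs, not Cosmic SDK calls. Because the generations don't wait on each other, wall-clock time is roughly the slowest generation plus the write, not the sum of all four calls.

```typescript
interface Draft { title: string; body: string; seoDescription: string }

// Hypothetical stand-ins for an AI generation call and a content write.
type Generate = (prompt: string) => Promise<string>;
type CreateObject = (draft: Draft) => Promise<{ id: string }>;

async function createArticle(
  topic: string,
  generate: Generate,
  createObject: CreateObject,
): Promise<{ id: string; draft: Draft }> {
  // The three generations are independent, so run them concurrently.
  const [title, body, seoDescription] = await Promise.all([
    generate(`Write a headline about ${topic}`),
    generate(`Write an article about ${topic}`),
    generate(`Write a 150-character SEO description about ${topic}`),
  ]);
  const draft: Draft = { title, body, seoDescription };
  // Persist only after every generation has succeeded.
  const { id } = await createObject(draft);
  return { id, draft };
}
```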

Conclusion: Architecture Serves Purpose

Twilio Segment's architectural evolution reminds us that there's no universal best practice. Microservices solve real problems at scale, but they introduce complexity that many teams don't need.

For AI-enabled content management systems, the evidence points toward more unified architectures that:

- Minimize latency for AI operations

- Simplify context management

- Reduce operational overhead

- Enable rapid innovation

As AI becomes central to content operations, the platforms that succeed will be those that make AI integration simple, fast, and reliable—not those that add architectural complexity.

The future of content management isn't about microservices or monoliths. It's about pragmatic, AI-native architectures that empower teams to build great experiences without drowning in complexity.

Ready to experience a unified, AI-enabled CMS? Explore Cosmic and see how simplified architecture enables powerful AI capabilities without the complexity.
